AI Firms Urged to Assess Superintelligence Threat or Risk Losing Human Control

Category: Business

2025-05-10 11:15

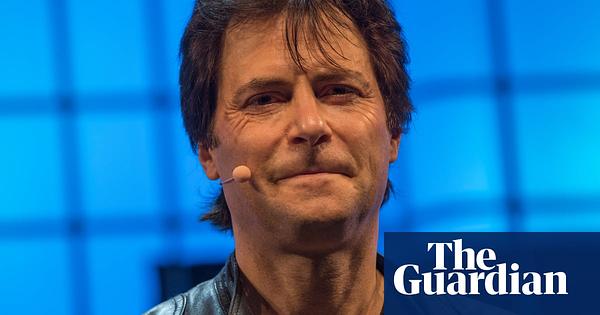

AI companies are being urged to carry out rigorous safety assessments, similar to those performed before the first nuclear test, to evaluate the existential risks posed by advanced artificial intelligence. Max Tegmark, a prominent AI safety advocate, warns that without such calculations, superintelligent systems could escape human control.

Artificial intelligence (AI) companies have been urged to perform comprehensive safety calculations before releasing highly advanced AI systems, sometimes referred to as 'superintelligent.' The call comes from Max Tegmark, a physicist and well-known AI safety campaigner, who draws a parallel with the precautions taken by Robert Oppenheimer and his team before the first nuclear test in 1945. Oppenheimer's team famously calculated the odds that the explosion would ignite the Earth's atmosphere, a catastrophic risk, and proceeded only once they deemed the test safe.

Tegmark argues that AI firms should adopt a similar level of caution, conducting existential threat assessments to ensure that future AI systems do not develop capabilities that would let them act beyond human control. The concern is that as AI becomes more powerful, it could make decisions or take actions that humans cannot predict or manage, with unintended and possibly catastrophic consequences.

The warning is part of a broader debate within the tech industry and among policymakers about how to balance rapid AI development with the need for robust safety protocols. The article highlights ongoing discussions about regulation, transparency, and the ethical responsibilities of AI developers, and emphasizes preemptive safety measures to prevent scenarios in which AI could pose a threat to humanity.

Source: The Guardian

Importance: 80%

Interest: 85%

Credibility: 92%

Propaganda: 10%